Stats Facts

Some stats facts: elephants and pointing, Reading Literary Fiction Improves Theory of Mind, Pies and doughnuts, statistical graphs.

Stats fact 1

Pies and doughnuts are off the menu!

Pie charts and their close cousins, doughnut charts, are part of our staple diet of graphs of data – we find them on blogs, in newspapers, in textbooks and in presentations at work. But one way to annoy your unassuming, mild-mannered local statistician is to ask for a pie chart (or worse, a Krispy Kreme).

What is the problem with pies and doughnuts?

Graphs of data should tell us about the quantities involved and help us to make accurate comparisons between these quantities. The quantities in each category should be easy to estimate and the category labels should be clear.

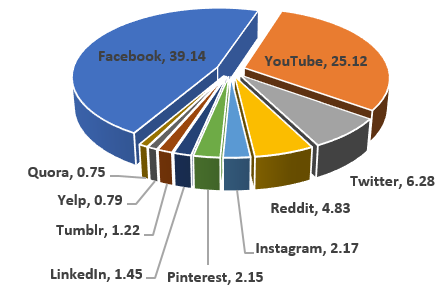

Pies and doughnuts fail because:

- Quantity is represented by slices; humans aren’t particularly good at estimating quantity from angles, which is the skill needed.

- Matching the labels and the slices can be hard work.

- Small percentages (which might be important) are tricky to show.

Four sure signs that your pie or doughnut is failing:

- You need to add the percentage to every slice.

- You need to directly label every slice.

- You have run out of colours for the slices.

- You decide to explode the chart to solve your first three problems.

A bit like this one:

(Market share of visits to social network sites in November, 2017)

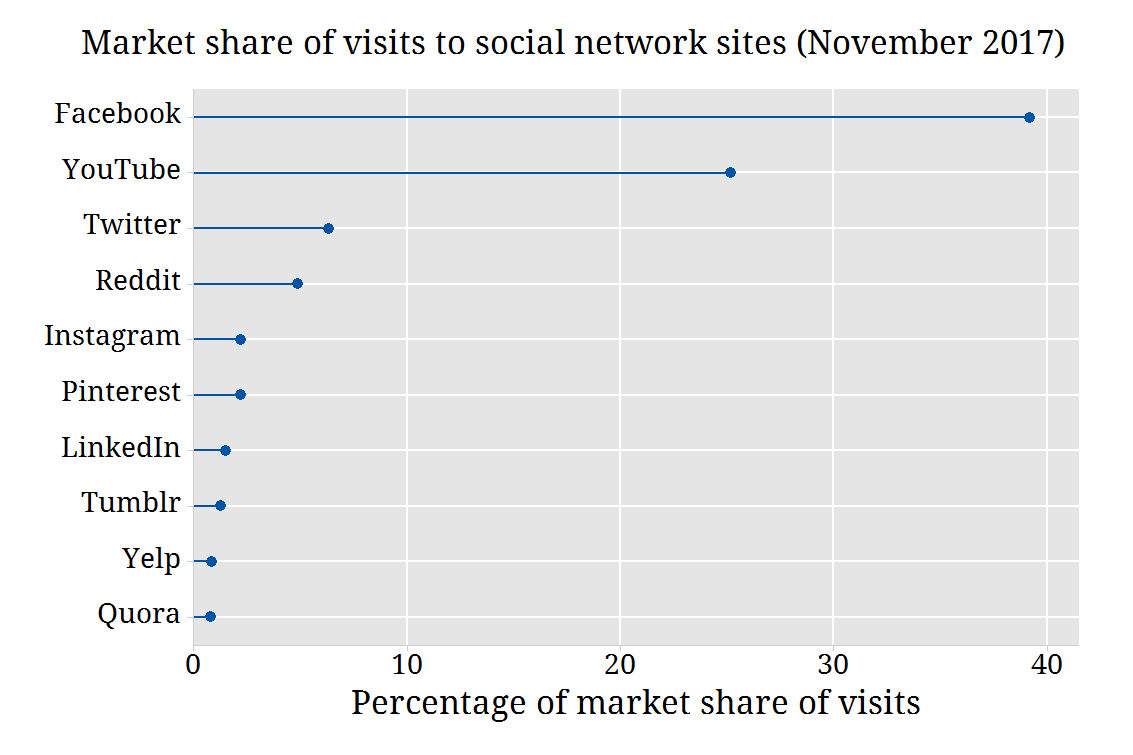

Here's an alternative graph of the data:

Some people might say this graph is boring, but as Edward Tufte warns in his book Envisioning Information:

"Cosmetic decoration, which frequently distorts the data, will never salvage an underlying lack of content."

Why is this chart so much better?

- We can estimate the quantities – the gridlines are helpful here.

- We can immediately see the order of the sites.

- We can accurately compare the different sites.
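The three points above can be tried out with a small sketch. It sorts the categories from largest to smallest and draws each one as a bar whose length is proportional to its value. The function name `text_bar_chart` and the site names and percentages are made up for illustration (they are not the market share figures from the chart), and a plotting library would normally draw the bars; plain text is used here only to keep the sketch dependency-free.

```python
def text_bar_chart(shares, width=40):
    """Render categories as horizontal text bars, largest first."""
    # Sort so the ranking of the categories is visible at a glance.
    ordered = sorted(shares.items(), key=lambda item: item[1], reverse=True)
    label_width = max(len(name) for name, _ in ordered)
    top = ordered[0][1]
    lines = []
    for name, value in ordered:
        # Bar length is proportional to the value, so bars can be compared directly.
        bar = "#" * round(width * value / top)
        lines.append(f"{name:<{label_width}} | {bar} {value:.1f}%")
    return "\n".join(lines)


# Made-up percentages for illustration only; NOT the figures from the chart above.
example_shares = {"Site C": 12.0, "Site A": 48.0, "Site D": 6.0, "Site B": 24.0}
print(text_bar_chart(example_shares))
```

Because every bar starts from the same baseline, the comparison relies on length rather than angle, which is the point of the alternative graph.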

The bottom line:

Stats fact 2

Can reading literary fiction really make people better at empathy?

That’s what a recent study claims to show. “Reading Literary Fiction Improves Theory of Mind” appeared in Science this month.

There were five different experiments, with people randomised into either two or three groups. One group was assigned to read literary fiction – works chosen by literary prize judges, and including authors such as Jane Austen and Anton Chekhov. The other groups varied, and were assigned to read popular fiction, non-fiction or nothing at all. The participants then completed the “Reading the Mind in the Eyes Test” (RMET), which was designed by Simon Baron-Cohen (cousin of Sacha Baron Cohen of “Ali G”, “Borat” and other fame ... but I digress).

You can do the RMET yourself

The RMET is supposed to measure your capacity for understanding other people’s mental states. You look at 36 different images of a pair of eyes, and have to say what the eyes are showing (for example: impatient? aghast? irritated? reflective?): four choices for each pair of eyes, one of which is deemed to be correct.

The groups that were allocated to read literary fiction did better than the groups allocated to read nothing, or such riveting pieces of non-fiction as “How the potato changed the world”.

In four experiments, the comparison of groups was “statistically significant”. The difference in mean RMET scores was between 1 and 3 (pairs of eyes); to put that in context, we are told that scores between 22 and 30 (out of 36) are common.
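To get a feel for the size of these differences, here is a rough bit of arithmetic. It uses only numbers quoted above (36 pairs of eyes, four choices each, differences of 1 to 3); the variable and function names are my own, and the guessing baseline assumes someone picks each answer purely at random.

```python
ITEMS = 36    # pairs of eyes in the RMET (from the text)
CHOICES = 4   # answer options per pair of eyes (from the text)

# Expected score for someone picking every answer at random (an assumption
# used only to give a baseline).
chance_score = ITEMS / CHOICES

# Reported range of differences in group means, in pairs of eyes.
diff_low, diff_high = 1, 3


def as_percent_of_test(points):
    """Express a number of items as a percentage of the whole test."""
    return 100 * points / ITEMS


print(f"Score expected from pure guessing: {chance_score:.0f} out of {ITEMS}")
print(f"Difference of {diff_low} item: {as_percent_of_test(diff_low):.1f}% of the test")
print(f"Difference of {diff_high} items: {as_percent_of_test(diff_high):.1f}% of the test")
```

So the reported differences amount to somewhere between roughly 3% and 8% of the test.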

What is really going on here? What should we make of this? Can it really be true that reading a bit of Jane Austen makes the reader more understanding of others, and insightful?

There is a crucial aspect of the experiments that needs highlighting. Reading the research article itself, I was wondering … how long did the intervention go for? Did they read the allocated works for days? Weeks? Months? The use of the word “temporarily” in the abstract, and “short-term” in the text, gives a hint. And … who were the people recruited for the experiments? Were they the usual suspects, university students? But I had to rely on media sources to discover that the subjects were recruited from Amazon’s “Mechanical Turk” service, which advertises small jobs to be completed for a few cents or dollars. They were paid $2 or $3 each to read for a few minutes; see the New York Times blog post.

Neither of these details is in the Science article. So the subjects were from a highly selective group of the population (would you sign up?) which may or may not be a problem, and they read for a few minutes, according to the New York Times’ account.

Should we trust these results? Although the effects were small, they were consistent in their direction, there were five of them, and they were independent, randomised experiments. At face value, this is evidence of a real effect.
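One way to see why five consistent results carry some weight is a simple sign-test style calculation. This is a sketch of my own, not an analysis from the Science article. It assumes that, if there were no real effect, each independent experiment would be equally likely to favour either group (ties ignored), like a coin flip. The helper `prob_at_least` is a hypothetical name.

```python
from fractions import Fraction
from math import comb


def prob_at_least(k, n, p=Fraction(1, 2)):
    """Exact P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


n_experiments = 5  # from the text

# Chance that all five favour literary fiction if each were a coin flip (assumption).
one_sided = prob_at_least(n_experiments, n_experiments)
# Chance that all five agree with each other, in either direction.
two_sided = 2 * one_sided

print(one_sided, float(one_sided))  # 1/32 0.03125
print(two_sided, float(two_sided))  # 1/16 0.0625
```

This ignores the size of each effect, so it says something about consistency of direction only.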

But it is all very brief and may be ephemeral. It seems possible to me that the data are consistent with a kind of “warming up” effect, similar to stretching and jogging before more vigorous exercise. The more literary works may focus the mind on its potential for empathy, causing the performance on an empathy test immediately following to be slightly better than otherwise, on average. Cricketers catch balls before fielding; tennis players warm up by hitting against their opponent for a few minutes; a student doing an oral exam in French may listen to some spoken French immediately beforehand. Why? Because it “tunes” and focuses the body and mind for the task immediately following. If the pre-task activity is not as relevant, the warm-up effect does not occur. This study may be observing this effect, and no more.

Is this effect worth having?

IS IT A RINGING ENDORSEMENT FOR READING LITERARY FICTION?

Well, the experiments, taken together, suggest there’s no harm.

But I think it’s a stretch to say that reading a few minutes of any text can really make a person more understanding and insightful over a long period of time, yet the authors do suggest this: “reading literary fiction may lead to stable improvements”. Put it this way. If, instead of doing the RMET straight after the required reading, the test were done six months later, do you think the results would show a benefit of the literary fiction over the non-fiction?

I would feel a lot less uneasy about the article if its title was “Reading Literary Fiction Improves Theory of Mind (for 15 minutes, anyway)”.

NOW BACK TO SENSE AND SENSIBILITY.

Stats fact 3

Can an elephant really interpret human pointing?

WHAT’S THE EVIDENCE?

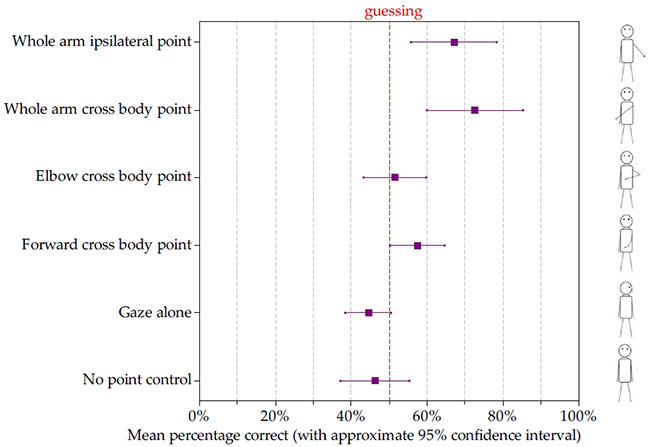

You might have seen this study, by Anna Smet and Richard Byrne, in the news. Captive African elephants were tested to see if they could interpret human pointing to find hidden food. The elephants had to choose between two containers, only one of which held food – so there was a 50% chance they would be right if they were guessing.

The elephants were tested with different ways of “pointing”, including the ones in this diagram:

One way included only using the eyes (gaze only). The experimenters also included a condition with no pointing at all. They called this “no point control”.

WHAT WERE THE RESULTS LIKE?

The elephants did a number of trials with each different way of pointing, so the researchers worked out the percentage of times that an elephant was correct. If elephants were guessing, the percentage correct would be 50%.
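To see how a percentage correct can be compared with guessing, here is a minimal sketch of an exact binomial calculation. It is not the researchers' own analysis, and the trial counts are hypothetical, chosen only for illustration. The function name `p_value_vs_guessing` is my own.

```python
from math import comb


def p_value_vs_guessing(correct, trials, p_guess=0.5):
    """One-sided exact binomial p-value: P(at least `correct` right by guessing)."""
    return sum(
        comb(trials, k) * p_guess**k * (1 - p_guess) ** (trials - k)
        for k in range(correct, trials + 1)
    )


# Hypothetical counts for illustration; NOT taken from the study.
trials = 20
for correct in (10, 14, 17):
    p = p_value_vs_guessing(correct, trials)
    print(f"{correct}/{trials} correct ({100 * correct / trials:.0f}%): p = {p:.3f}")
```

The further the number correct sits above half the trials, the less plausible guessing becomes as an explanation, and the more trials there are, the smaller the departure from 50% that can be detected.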

Our graph of the study results shows the mean percentage correct for the elephants for each type of pointing. The lines from the squares are some more technical detail; they show uncertainty.

WHAT DOES THE GRAPH TELL US?

- The results for no pointing are close to the red line – the mean percentage we would expect if the elephants were guessing.

- The results for subtle ways of pointing – forward cross body and elbow cross body – are also close to the red line.

- The results for clearer ways of pointing are to the right of the red line – the elephants are doing better than chance guessing.

The researchers interpreted this pattern of results to mean that the elephants understood the experimenter’s communicative intent.

HOW CONVINCING IS THE EVIDENCE?

This looks like a good study. It’s a bit more complicated than my description here, but you can check it out yourself, and even look at videos of the experiment. Here are just a few of the reasons:

- A good feature of the study was that the order of testing of the elephants with the different ways of pointing was randomised. If the researchers hadn’t done this, we might not be able to untangle possible learning effects over time from the effects of the different ways of pointing.

- The results are coherent: the elephants did no better than chance for the control, and the more definitive the point, the better the elephants did.

- The researchers thought very carefully about a lot of fine detail. This type of study is tricky – there could be many subtle ways that the elephants could be given unintentional cues. For example, the elephant’s handler knew where the food was. Another concern is where the researcher stood: between the containers? next to the container with the food? next to the empty container?
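The randomised ordering mentioned in the first point above can be sketched as follows. This illustrates the general idea only; the study's actual randomisation scheme may well differ. The condition names are the ones mentioned in this article (the study used others too), and the elephant labels and seed are hypothetical.

```python
import random

# Ways of pointing named in this article (a subset of those in the study).
CONDITIONS = [
    "whole arm ipsilateral",
    "forward cross body",
    "elbow cross body",
    "gaze only",
    "no point control",
]


def randomised_order(conditions, rng):
    """Return a new list with the conditions in random order."""
    order = list(conditions)  # copy, so the original list is left untouched
    rng.shuffle(order)
    return order


rng = random.Random(1)  # fixed seed only so the sketch is repeatable
for elephant in ["Elephant 1", "Elephant 2", "Elephant 3"]:  # hypothetical animals
    print(elephant, "->", randomised_order(CONDITIONS, rng))
```

Because each animal meets the conditions in a different random order, any gradual learning over the session is spread across the ways of pointing rather than piling up on whichever happened to be tested last.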

HOW DOES THIS COMPARE TO BABIES?

On average, elephants were correct 68% of the time when a whole arm ipsilateral point was used. (“Ipsilateral” means “on the same side of the body”.) The researchers tell us that one-year-old children get 73% right on average on this type of task.

WHAT’S THE THEORY?

The researchers described a couple of different explanations of why elephants might be able to interpret pointing as communication.

- One theory is that this ability has evolved as elephants became domesticated. The evidence is that domesticated animals do better at the pointing task than non-domesticated animals.

- An alternative view is that animals already sensitised to cues from humans tend to be more suitable for domestication.